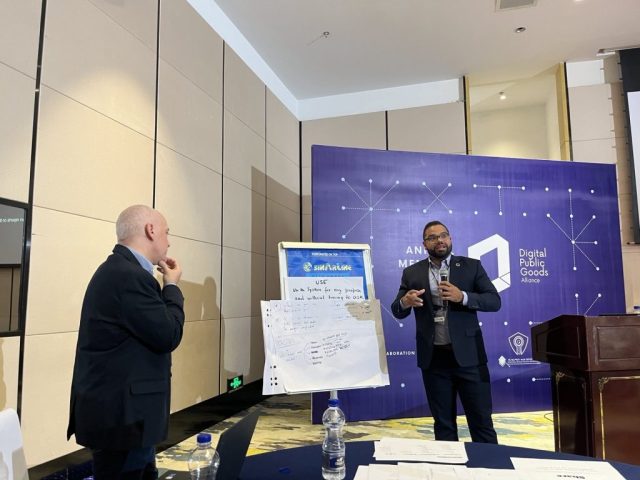

DPGA members engage in Open Source AI Definition workshop

The meeting of the Digital Public Goods Alliance (DPGA) members in Addis Ababa was very informative. The OSI led a workshop to define Open Source AI and joined the subsequent presentation by the AI Community of Practice (CoP) of the DPGA. There were about 40 people in the room, split into seven groups of 5-6 people each. They were asked to individually review the four basic elements of the draft Open Source AI Definition and provide suggestions. Few people were familiar with developing AI systems and there were almost no lawyers. The audience consisted mostly of policy makers and DPG product owners (not developers).

Results of the Open Source AI workshop

There was broad agreement that the wording as presented was fairly good but required some tweaks. Most of the tables were eager to widen the scope of the Definition to include principles of ethics.

Some of the most notable comments:

- One group said that, in order to study the AI system, it must be possible to understand the design assumptions behind it. Another group suggested adding a reference to the explainability of its outcomes.

- One group highlighted that the purpose of studying the AI system is to gain confidence, understand the risks it poses and its limits, and provide a path to improve it. They recommended more extensive wording to clarify that being able to inspect its components (datasets, assumptions, code, etc.) is important. They also added that it is not strictly necessary for data to be made fully available, citing privacy as one reason.

- On the “modify” question, the group suggested simplifying the wording, replacing … with “outputs”.

- On “sharing”, the group recommended limiting shareability to responsible purposes, and extended the scope of this recommendation to Use as well.

- There needs to be a fifth principle to “do no harm”.

A surprising outcome came from a group that felt that the verbs (study, use, modify, share) in the draft definition are not sufficient and new ones are necessary for AI. They brainstormed and came up with an initial list: train, tend (curate and store), evaluate (its capabilities) and evoke the model. This was a fascinating conversation that I promised to continue with its main proponent.

The comments received gave me a chance to close the workshop by explaining why the Open Source Definition doesn’t prescribe respecting the law and avoids discussing ethics, and why the OSI recommends moving these issues out of licenses and into project governance and policies.

It was energizing to see that DPGA members have such a good opinion of Open Source and its power to be positively transformative that they want to do even more good with it. But injecting ethical principles into open definitions overloads them massively. The OSI will have to do more to explain that a definition should no more be dictating “acceptable use” than Meta, Alphabet or anyone else. Ethical considerations are highly contextual, and there are rarely clear answers that a universal standard like the Open Source Definition and the future Open Source AI Definition can reasonably cover. The DPG Standard, on the other hand, is a more suitable document to include ethical considerations because it is more closely tied to the context in which technologies are deployed.

Notes from the Community of Practice meeting

The second half of the afternoon saw the meeting of the Community of Practice on AI systems as digital public goods, co-hosted by the DPGA and UNICEF. They showed their first approach to distinguishing the degrees of openness of an AI system’s components. The CoP has a very difficult task with two major obstacles. The first is that they have to come up with a proposal to update the DPG Standard to cover AI before a well-established definition of Open Source AI exists. The second is that they need to look at the intersection of responsible and open AI, balancing the values of “open” with a set of risks that are not yet fully understood either. All this while technology evolves rapidly and the AI business ecosystem spreads FUD in all directions.

I’ve been highly skeptical about this gradient approach, which is not too different from what Irene Solaiman at Hugging Face proposed. As someone in the audience said: introducing a gradient approach for DPG AI risks creating an opening to also have a gradient for software, diluting the mandate for Open Source software in the DPG Standard. With the race to create “quasi-open-source” licenses, the threat is too real to dismiss. I believe that Open Source AI can be as binary as Open Source software, and the way to achieve that is to look not at the individual components of AI systems but at the whole. The next phase of OSI’s work on the Open Source AI Definition will explore exactly this aspect, diving deeper into practical examples. What do I need in order to study, use, share and modify something like LAION’s Open Assistant?
