OSI at PyCon US: engaging with AI practitioners and developers as we reach OSAID’s first release candidate

As part of the Open Source AI Definition roadshow, and as we approach the first release candidate of the draft, the Open Source Initiative (OSI) participated in PyCon US 2024, the annual gathering of the Python community. This opportunity was important because PyCon US brings together AI practitioners and developers alike, and their input on what constitutes Open Source AI is of great value. There, the OSI organized a workshop and hosted a community booth.

OSAID Workshop: compiling a FAQ to make the definition clear and easy to use

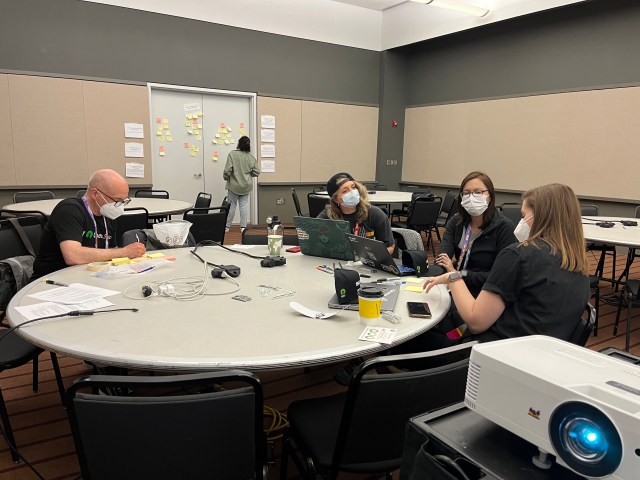

The OSI has embarked on a co-design process with multiple stakeholders to arrive at the Open Source AI Definition (OSAID). This process has been led by Mer Joyce, the co-design expert and facilitator, and Stefano Maffulli, the executive director of the OSI.

At the workshop organized at PyCon US, Mer provided an overview of the co-design process so far, summarized below.

The first step of the co-design process was to identify the freedoms needed for Open Source AI. After various online and in-person activities and discussions, including five workshops across the world, the community identified four freedoms:

- To Use the system for any purpose and without having to ask for permission.

- To Study how the system works and inspect its components.

- To Modify the system for any purpose, including to change its output.

- To Share the system for others to use with or without modifications, for any purpose.

The next step was to form four working groups to initially analyze four AI systems. To achieve better representation, special attention was given to diversity, equity and inclusion. Over 50% of the working group participants are people of color, 30% are black, 75% were born outside the US and 25% are women, trans and nonbinary.

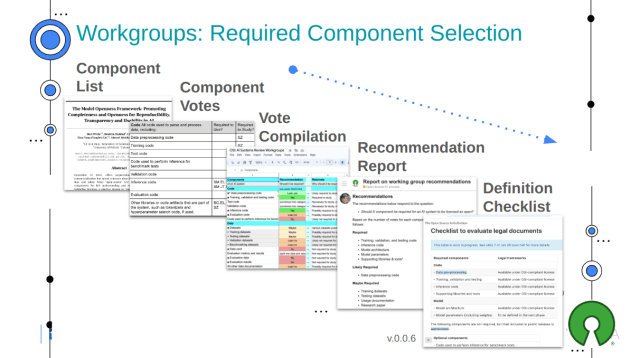

These working groups discussed and voted on which AI system components should be required to satisfy the four freedoms for AI. The components they voted on are the ones described in the Model Openness Framework developed by the Linux Foundation.

Votes were compiled relative to μ, the mean number of votes received per component. Components that received more than 2μ votes were marked required, and those with between 1.5μ and 2μ votes were marked likely required. Components with between 0.5μ and μ votes were marked likely not required, and those with fewer than 0.5μ votes were marked not required.
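The thresholding rule above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the working groups' actual tooling: the boundaries 1.5μ, 2μ, 0.5μ and μ are assumed to be inclusive as written in the code, the band between μ and 1.5μ (which the rule above does not name) is labeled "unclassified", and the vote counts are hypothetical, not real working-group data.

```python
from statistics import mean


def classify_components(votes: dict[str, int]) -> dict[str, str]:
    """Label each component by comparing its vote count with the mean (mu)."""
    mu = mean(votes.values())
    labels = {}
    for component, v in votes.items():
        if v > 2 * mu:
            labels[component] = "required"
        elif v >= 1.5 * mu:  # assumed inclusive: 1.5*mu <= v <= 2*mu
            labels[component] = "likely required"
        elif v > mu:
            # Assumption: no category is named for mu < v < 1.5*mu.
            labels[component] = "unclassified"
        elif v >= 0.5 * mu:  # assumed inclusive: 0.5*mu <= v <= mu
            labels[component] = "likely not required"
        else:
            labels[component] = "not required"
    return labels


# Hypothetical vote counts for illustration only; their mean is 5.
votes = {"component A": 12, "component B": 8, "component C": 6,
         "component D": 3, "component E": 1, "component F": 0}

for name, label in classify_components(votes).items():
    print(f"{name}: {label}")
```

With a mean of 5, component A (12 > 10) is required, B (8, between 7.5 and 10) is likely required, C (6) falls in the unnamed band, D (3, between 2.5 and 5) is likely not required, and E and F (below 2.5) are not required.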

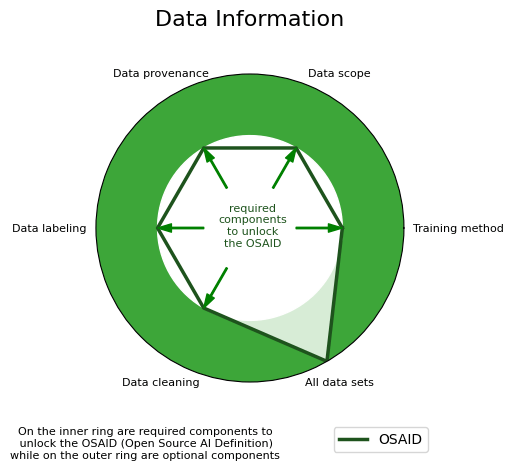

The working groups also evaluated the legal frameworks and legal documents applicable to each component. Finally, each working group published a recommendation report. The result is the current draft of the OSAID, with a definition checklist covering a total of 17 components. More working groups are being formed to evaluate how well other AI systems align with the definition.

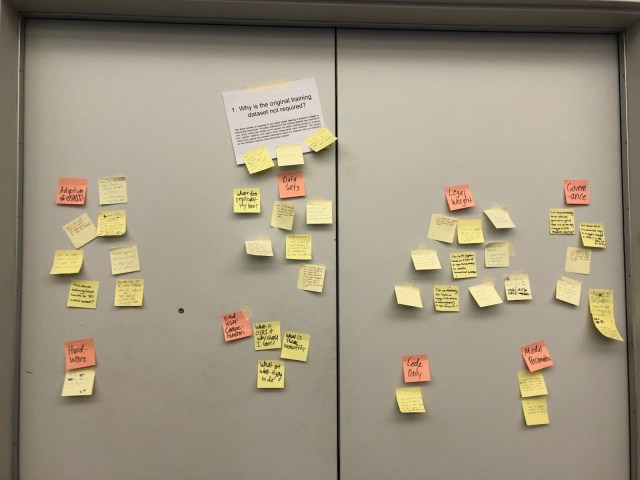

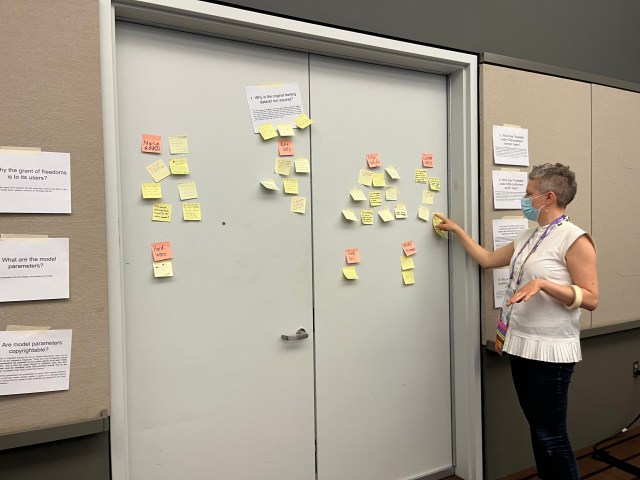

After providing an overview of the co-design process, Mer led the participants in an exercise to compile a FAQ.

The questions raised at the workshop revolved around the following topics:

- End user comprehension: How and why are AI systems different from Open Source software? Why should end users care whether an AI system is open?

- Datasets: Why is data itself not required? Should Open Source AI datasets be required to prove copyright compliance? How can one audit these systems for bias without the data? What do data provenance and data labeling entail?

- Models: How can proper attribution of model parameters be enforced? What is the ownership/attribution of model parameters which were trained by one author and then “fine-tuned” by another?

- Code: Can projects that include only source code (no data info or model weights) still use a regular Open Source license (MIT, Apache, etc.)?

- Governance: For a specific AI, who determines whether the information provided about the training, dataset, process, etc. is “sufficient” and how?

- Adoption of the OSAID: What are the incentives for people and companies to adopt this standard?

- Legal weight: Is the OSAID supposed to have legal weight?

The questions raised at the workshop, together with their answers, will help improve the existing FAQ, which will be published alongside the OSAID.

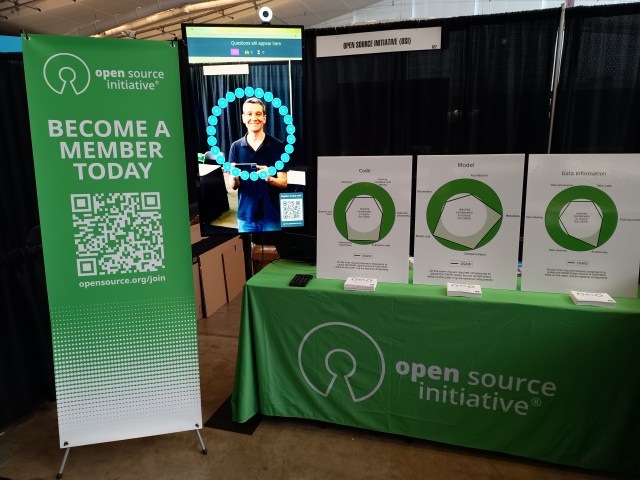

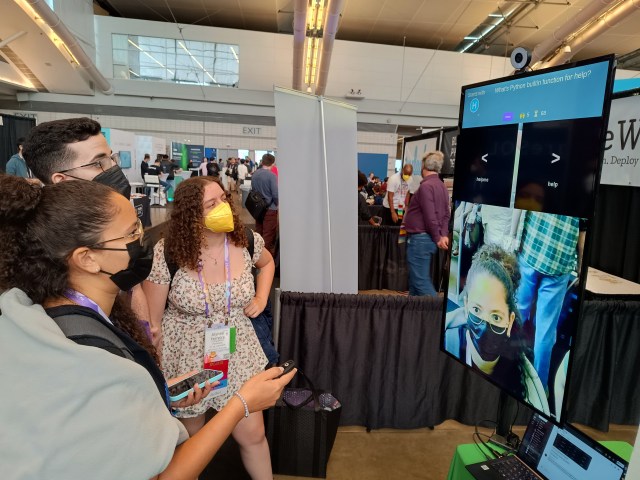

Community Booth: gathering feedback on the “Unlock the OSAID” visualization

At the community booth, the OSI held two activities to draw in participants interested in Open Source AI. The first activity was a quiz developed by Ariel Jolo, program coordinator at the OSI, to assess participants’ knowledge of Python and AI/ML. Once we had an understanding of their skills, we went on to the second and main activity, which was to gather feedback on the OSAID using a novel way to visualize how different AI systems match the current draft definition as described below.

Making it easy for different stakeholders to visualize whether or not an AI system matches the OSAID is a challenge, especially because there are so many components involved. This is where the visualization concept we named “Unlock the OSAID” came in.

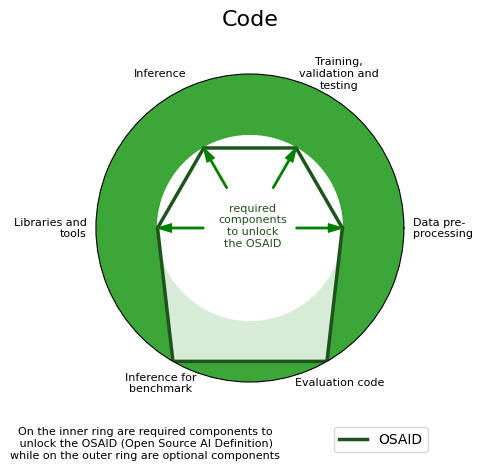

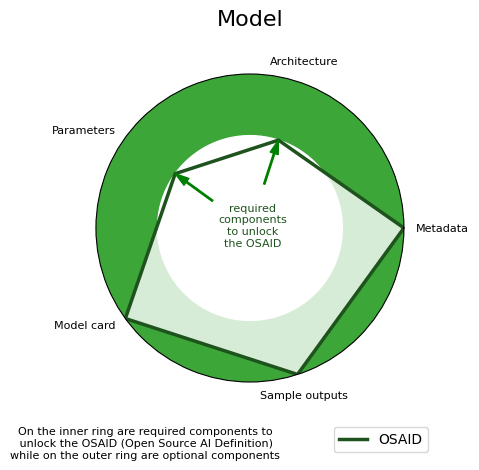

The OSI keyhole is a well-recognized logo that represents the source code that unlocks the freedoms to use, study, modify, and share software. With Unlock the OSAID, we played on that same idea, but for AI systems. We displayed three keyholes representing the three domains these 17 components fall within: code, model and data information.

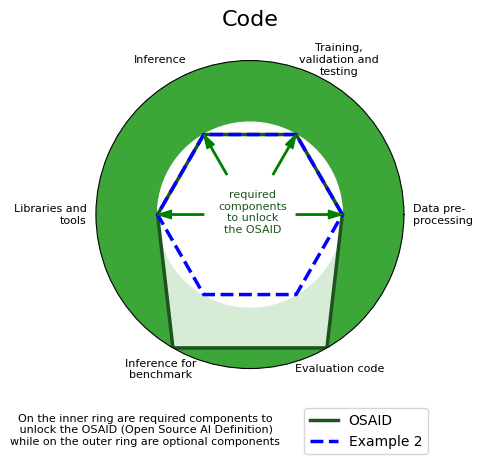

Here is the image representing the “code keyhole” with the required components to unlock the OSAID:

The inner ring holds the components required to unlock the OSAID, while the outer ring holds optional components. The required code components are: libraries and tools; inference; training, validation and testing; data pre-processing. The optional components are: inference for benchmark; evaluation code.

To fully unlock the OSAID, an AI system must have all the required components for code, model and data information. To better understand how the “Unlock the OSAID” visualization works, let’s look at two hypothetical AI systems: example 1 and example 2.

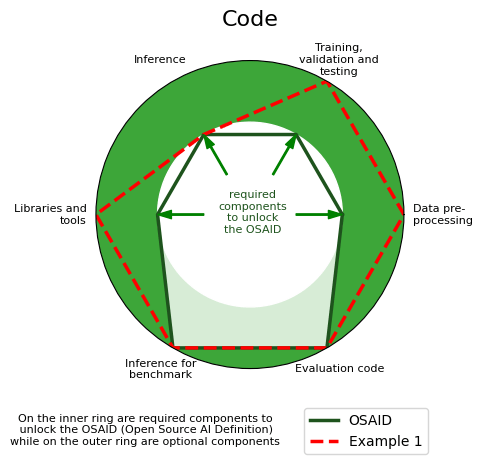

Let’s start by looking at example 1 (in red) to see whether this system unlocks the OSAID for code.

Example 1 only provides inference code, so the key (in red) doesn’t “fit” the code keyhole (in green).

Now let’s look at example 2 (in blue):

Example 2 provides all required components (and more), so the key (in blue) fits the code keyhole (in green). Therefore, example 2 unlocks the OSAID for code. For example 2 to be considered Open Source AI, it would also have to unlock the OSAID for model and data information.
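The keyhole logic can be expressed as a subset check: a key fits when it contains every required (inner-ring) component, optional (outer-ring) components never change the result, and a system unlocks the OSAID only if its keys fit all three keyholes. The sketch below assumes that reading. The function names are hypothetical, the extra component in example 2 is assumed to be evaluation code, and because this post does not list the model and data information requirements, those two keyholes are reduced to clearly hypothetical placeholders.

```python
# Code keyhole components as listed in this post.
CODE_REQUIRED = frozenset({
    "libraries and tools",
    "inference",
    "training, validation and testing",
    "data pre-processing",
})
# Outer ring: shown for completeness; optional parts never affect the fit.
CODE_OPTIONAL = frozenset({"inference for benchmark", "evaluation code"})


def key_fits(required: frozenset[str], provided: set[str]) -> bool:
    """A key fits a keyhole when every required component is provided."""
    return required <= provided


def unlocks_osaid(required_by_domain: dict[str, frozenset[str]],
                  provided_by_domain: dict[str, set[str]]) -> bool:
    """A system unlocks the OSAID only if its key fits every keyhole."""
    return all(
        key_fits(required, provided_by_domain.get(domain, set()))
        for domain, required in required_by_domain.items()
    )


# Hypothetical systems mirroring examples 1 and 2.
example_1 = {"inference"}
example_2 = set(CODE_REQUIRED) | {"evaluation code"}  # "and more" assumed

print(key_fits(CODE_REQUIRED, example_1))  # False
print(key_fits(CODE_REQUIRED, example_2))  # True

# Placeholder model and data information keyholes (hypothetical components).
required_by_domain = {
    "code": CODE_REQUIRED,
    "model": frozenset({"hypothetical model component"}),
    "data information": frozenset({"hypothetical data information component"}),
}
print(unlocks_osaid(required_by_domain, {"code": example_2}))  # False
```

Example 2 fits the code keyhole but still fails the overall check, because it supplies nothing for the model and data information keyholes.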

We received good feedback from participants about the “Unlock the OSAID” visualization. Once participants grasped the concept of the keyholes and which components were required or optional, it was easy to identify whether an AI system unlocks the OSAID. They could see at a glance whether the keys fit the keyholes. If all keys fit, then that AI system adheres to the OSAID.

Final thoughts: engaging with the community and promoting Open Source principles

For me, the highlight of PyCon US was the opportunity to finally meet members of the OSI and the Python community in person, both new and old acquaintances. I had good conversations with Deb Nicholson (Python Software Foundation), Hannah Aubry (Fastly), Ana Hevesi (Uploop), Tom “spot” Callaway (AWS), Julia Ferraioli (AWS), Tony Kipkemboi (Streamlit), Michael Winser (Alpha-Omega), Jason C. MacDonald (OWASP), Cheuk Ting Ho (CMD Limes), Kamile Demir (Adobe), Mariatta Wijaya (PSF), Loren Clary (PSF) and Miaolai Zhou (AWS). I also interacted with many folks from the following communities: Python Brazil, Python en Español, PyLadies and Black Python Devs. It was also great to bump into legends like Seth Larson (PSF), Peter Wang (Anaconda) and Guido van Rossum.

I loved all the keynotes, in particular Sumana Harihareswara’s, about how she has improved the Python Software Foundation’s infrastructure, and Simon Willison’s, about how we can all benefit from Open Source AI.

To celebrate this milestone for the OSAID, Stefano also hosted a special dinner for Mer and me, overlooking Pittsburgh.

Overall, our participation at PyCon US was a success. We shared the work the OSI has been doing toward the first release candidate of the Open Source AI Definition, and we did it in an entertaining and engaging way, making plenty of connections along the way.

Photo credits: Ana Hevesi, Mer Joyce, and Nick Vidal